Sensor-Based Liveness Verifications for Mobile Data

Citizen journalism ranges from videos of conflicts in areas with limited professional journalism presence to footage of spontaneous events (e.g., tsunamis, earthquakes, meteorite landings, abuse of authority). Such videos are often distributed through sites such as CNN's iReport, NBC's Stringwire, or YouTube's CitizenTube, and can be broadcast by news networks.

This development raises important questions about the credibility of impactful videos (e.g., fake news). The potential impact of such videos, coupled with financial incentives, can motivate uploaders to fabricate data. Videos from other sources can be copied, projected and recaptured, or cut and stitched before being uploaded as genuine to social media sites. For instance, plagiarized videos with fabricated location and time stamps can be created through "projection" attacks: the attacker uses specialized apps to set the device's GPS position to a desired location, then uses the device to film a projected version of the target video.

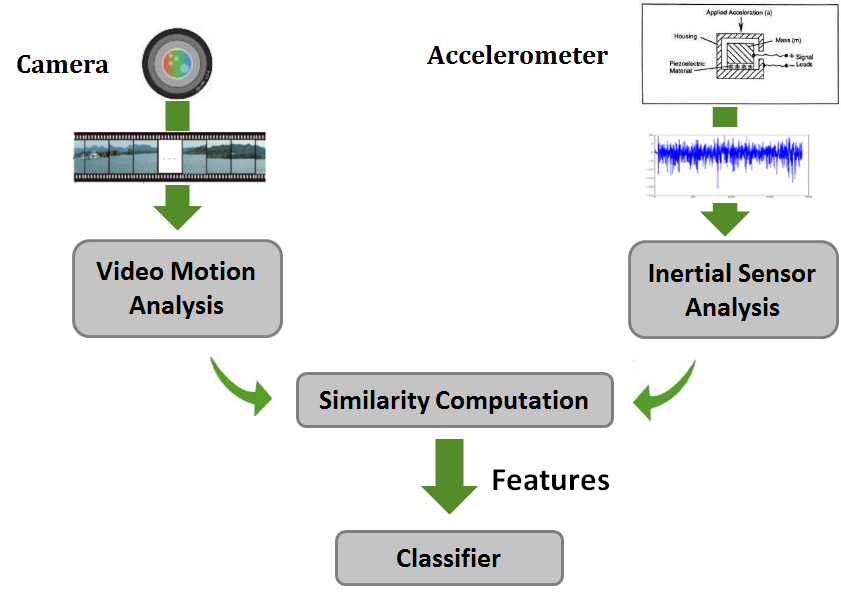

To address this problem, we exploit the observation that in plagiarized videos, the motion encoded in the video stream is likely to be inconsistent with the motion recorded by the device's inertial sensors (e.g., the accelerometer). As illustrated below, we leverage the motion sensors of mobile devices to verify the liveness of video streams. We exploit the inherent movement of the user's hand while shooting a video to verify the consistency between the motion inferred from the captured video and the inertial sensor signals. Our intuition is that signals captured simultaneously necessarily bear relationships that are difficult to fabricate or emulate: the movement of the scene in the video stream should correspond to the movement of the device, as registered by its motion sensors.

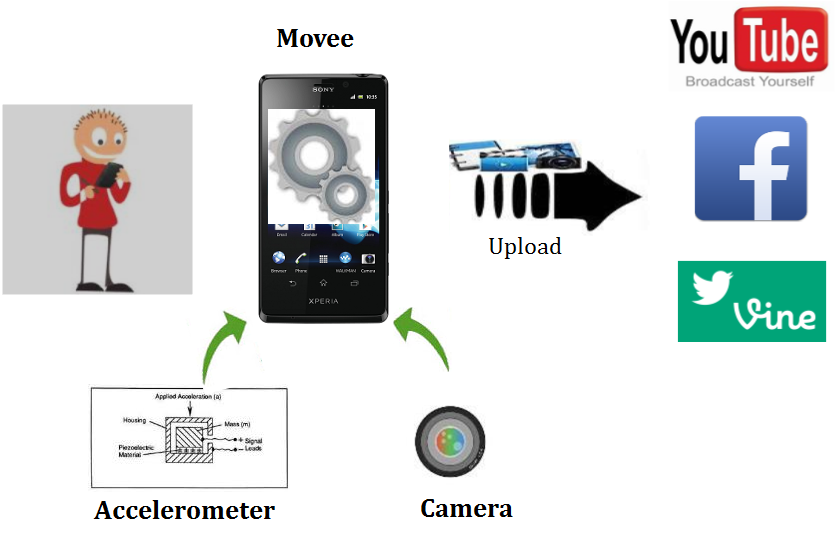
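The consistency check described above can be illustrated with a minimal sketch. It is not the Movee or Vamos pipeline. It assumes grayscale frames given as NumPy arrays, estimates only horizontal scene motion using phase correlation (a standard image-registration technique swapped in here for illustration), assumes one accelerometer axis already resampled to one reading per frame, and compares the two motion signals with a Pearson correlation. The names `frame_shift`, `motion_consistency`, and `looks_live`, and the 0.5 threshold, are hypothetical.

```python
import numpy as np


def frame_shift(prev: np.ndarray, curr: np.ndarray) -> float:
    """Horizontal translation (pixels) of `curr` relative to `prev`, via phase correlation."""
    cross = np.fft.fft2(curr) * np.conj(np.fft.fft2(prev))
    cross /= np.abs(cross) + 1e-12        # keep only the phase difference
    corr = np.fft.ifft2(cross).real       # peaks at the translation offset
    _, dx = np.unravel_index(np.argmax(corr), corr.shape)
    width = prev.shape[1]
    # Offsets past the midpoint wrap around and are really negative shifts.
    return float(dx - width if dx > width // 2 else dx)


def motion_consistency(frames: list[np.ndarray], accel_x: np.ndarray) -> float:
    """Pearson correlation between scene acceleration in the video and the accelerometer.

    Assumption: `accel_x` holds one reading per frame, along the device axis
    that maps to the image's horizontal direction.
    """
    shifts = [frame_shift(a, b) for a, b in zip(frames[:-1], frames[1:])]
    position = np.concatenate([[0.0], np.cumsum(shifts)])  # scene offset per frame
    video_accel = np.diff(position, n=2)                   # centred at frames 1..N-2
    sensor = np.asarray(accel_x, dtype=float)[1:-1]        # the same frames
    return float(np.corrcoef(video_accel, sensor)[0, 1])


def looks_live(corr: float, threshold: float = 0.5) -> bool:
    """Hypothetical decision rule; the sign is ignored because it depends on axis conventions."""
    return abs(corr) >= threshold
```

In a genuine capture the scene shifts opposite to the device, so the correlation is strongly negative or positive depending on how image and sensor axes are aligned. A practical system would also have to handle rotation, scale changes, sub-pixel motion, and scenes that move on their own, none of which this sketch models.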

Movee. We developed Movee, a system that provides CAPTCHA-like verifications by including the user, through her mobile device, in the verification process. However, instead of relying on the cognitive strength of humans in interpreting visual information, Movee relies on their innately flawed ability to hold a camera still.

Vamos. Movee relies on an initial verification step and imposes a dominant direction on the user's motion. To address these limitations, we introduced Vamos, a Video Accreditation through Motion Signatures system. Vamos provides liveness verifications for videos of arbitrary length. Vamos is completely transparent to users: it requires neither special user interaction nor any change in user behavior. Instead of enforcing an initial verification step, Vamos uses the entire video and acceleration streams for verification. It divides the video and acceleration data into fixed-length chunks, classifies each chunk, and then uses the per-chunk results, along with a suite of novel features that we introduce, to classify the entire sample. Vamos does not impose a dominant motion direction, and thus does not constrain the user's movements. Instead, it verifies the liveness of the video by extracting features from all directions of movement, in both the video and acceleration streams.
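The chunk-then-aggregate structure described for Vamos can be sketched as follows. This is only an illustration under stated assumptions, not the classifiers or the feature suite of Vamos. The per-chunk "classifier" is a hypothetical correlation rule evaluated over every pair of video and accelerometer axes, so that no dominant direction is assumed, and the sample-level features (`live_fraction`, `mean_score`, `min_score`), the chunk length, and both thresholds are placeholders. Inputs are assumed to be per-frame scene acceleration along the image's x and y axes and three-axis accelerometer readings resampled to the same rate.

```python
import numpy as np


def split_chunks(signal: np.ndarray, chunk_len: int) -> list[np.ndarray]:
    """Split a (T, d) signal into consecutive (chunk_len, d) chunks, dropping any remainder."""
    n = len(signal) // chunk_len
    return [signal[i * chunk_len:(i + 1) * chunk_len] for i in range(n)]


def chunk_score(video_chunk: np.ndarray, accel_chunk: np.ndarray) -> float:
    """Hypothetical per-chunk score: the strongest |correlation| between any
    video motion axis and any accelerometer axis, so no direction is privileged."""
    best = 0.0
    for v in video_chunk.T:
        for a in accel_chunk.T:
            if v.std() == 0 or a.std() == 0:
                continue  # a flat axis carries no motion information
            best = max(best, abs(float(np.corrcoef(v, a)[0, 1])))
    return best


def classify_sample(
    video_motion: np.ndarray,        # (T, 2): scene acceleration along image x and y
    accel: np.ndarray,               # (T, 3): accelerometer x, y, z at the same rate
    chunk_len: int = 30,             # hypothetical: about 1 s at 30 fps
    chunk_threshold: float = 0.5,    # hypothetical per-chunk decision threshold
    min_live_fraction: float = 0.6,  # hypothetical sample-level threshold
) -> tuple[bool, dict[str, float]]:
    video_chunks = split_chunks(np.asarray(video_motion, dtype=float), chunk_len)
    accel_chunks = split_chunks(np.asarray(accel, dtype=float), chunk_len)
    scores = np.array([chunk_score(v, a) for v, a in zip(video_chunks, accel_chunks)])
    if scores.size == 0:
        return False, {}  # too short to form a single chunk
    chunk_labels = scores >= chunk_threshold  # per-chunk live / not-live decisions
    # Aggregate the per-chunk results into sample-level features.
    features = {
        "live_fraction": float(chunk_labels.mean()),
        "mean_score": float(scores.mean()),
        "min_score": float(scores.min()),
    }
    return features["live_fraction"] >= min_live_fraction, features
```

Because every chunk is scored independently, the whole recording contributes evidence regardless of its length, and taking the maximum over all axis pairs is one simple way to avoid favoring any single direction of movement.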

Applications. Liveness verifications are a cornerstone of many practical applications that use the mobile device camera as a trusted witness. The examples below discuss several application scenarios:

- Citizen journalism. Our solutions can be used in conjunction with trusted location and time verification solutions to verify claims made by video uploaders.

- Smarter cities, Mobile 311. Mobile 311 apps from municipalities and metropolitan governments tap into crowdsourced reports of potholes and open manholes to schedule city maintenance and avoid possible hazards. To gauge the correctness and severity of a case, these systems require multiple users to report the same case before dispatching a crew. The shortcoming is that the system must wait for multiple complaints to arrive before acting, and may even be accused of malpractice for failing to act on information it already has. Our solutions can act as the required witness to the genuineness of the reported case, eliminating the need to wait for multiple reports.

- Mobile authentication. The cameras of mobile devices (e.g., smartphones) can be used to implement seamless, continuous face-recognition-based authentication. Movee can prevent an attacker from bypassing this procedure with a picture of the smartphone's owner.

- Payment verifications. Banks such as Bank of America and online payment sites such as PayPal let users cash checks through their mobile devices: the user takes a picture of the check and uploads it to the provider's site. This service carries risks for the provider, as users may try to deposit bad checks. Instead of relying on a picture of the check, our approach requires users to take a movie of the check from multiple angles. The movie ensures that the user has physical access to the check, and liveness verifications provide assurance that the user took the movie with his mobile device.

- Tamper-proof supply chain. Supply chains often rely on tamper-evident seals containing printed barcodes to assert the location and time of an asset. Workers can use our solutions to capture a movie of the asset, along with its seals, and send it to remote verifiers. The liveness verifications, coupled with proofs of the movie's location and time, can provide assurance that the movie has not been plagiarized (e.g., a movie of a previously recorded movie) and that the asset is at the right place at the right time.

- Prototype and asset verification. Kickstarter requires that real prototypes be used in campaign promotion videos. Campaign participants can use Movee and Vamos to verify the liveness of footage they provide as evidence. Our solutions can also provide proof-of-ownership verifications for sellers on sites such as Amazon and eBay.
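The tamper-proof supply chain scenario combines three independent checks on the same movie. A minimal sketch of such an acceptance rule follows, assuming the liveness verdict and the location and time proofs have already been obtained and verified elsewhere; the `Evidence` type, the `accept` function, and the 1 km and one-hour tolerances are hypothetical.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Evidence:
    live: bool             # verdict of a liveness check (e.g., Movee or Vamos)
    lat: float             # capture location, assumed already proven
    lon: float
    captured_at: datetime  # capture time, assumed already proven


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres on a spherical Earth (radius 6371 km)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(min(1.0, math.sqrt(h)))


def accept(ev: Evidence, lat: float, lon: float, expected_at: datetime,
           max_km: float = 1.0, max_skew: timedelta = timedelta(hours=1)) -> bool:
    """Hypothetical rule: a live movie, taken at the right place, at the right time."""
    if not ev.live:
        return False  # possibly a recaptured (plagiarized) movie
    if haversine_km(ev.lat, ev.lon, lat, lon) > max_km:
        return False  # the asset is not where it should be
    return abs(ev.captured_at - expected_at) <= max_skew
```

Rejecting on the liveness verdict first reflects the point made above: location and time proofs alone cannot rule out a movie of a previously recorded movie.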

Publications

Mahmudur Rahman, Mozhgan Azimpourkivi, Umut Topkara, Bogdan Carbunar.

IEEE Transactions on Mobile Computing (TMC), Volume 16, Issue 11, November 2017.

Mahmudur Rahman, Umut Topkara, Bogdan Carbunar.

IEEE Transactions on Mobile Computing (TMC), Volume 15, Issue 5, May 2016.

Mahmudur Rahman, Mozhgan Azimpourkivi, Umut Topkara, Bogdan Carbunar.

In Proceedings of the 8th ACM Conference on Security and Privacy in Wireless and Mobile Networks (WiSeC) [acceptance rate=19%], New York City, June 2015.

Mahmudur Rahman, Umut Topkara, Bogdan Carbunar.

In Proceedings of the 29th Annual Computer Security Applications Conference (ACSAC) [acceptance rate=19%], New Orleans, December 2013.